Python Helps Usher in Bio 2.0

Bio 2.0 is coming, with on-demand protein synthesis. I thought it was far in the future, but then I found this great hands-on article explaining how to do it right now!

That article blew my mind in two ways:

- You can literally type a sequence of base pairs (e.g., ATCGATTGAGCTCTAGCG) into a text file and a lab can create the protein you specified.

-

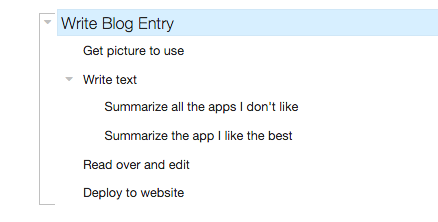

You can completely automate testing. The above article shows how to write a Python program to say exactly how you want your biology experiment conducted. Your experiment can have conditions. The way the above works is ...